Generative Fuzzy System for Sequence-to-Sequence Learning via Rule-based Inference

Published in IEEE Transactions on Neural Networks and Learning Systems, 2025

Hailong Yang, Zhaohong Deng, Wei Zhang, Zhuangzhuang Zhao, Guanjin Wang, Kup-sze Choi. TNNLS 2025 (SCI Area-1). https://doi.org/10.1109/TNNLS.2025.3615650

GenFS

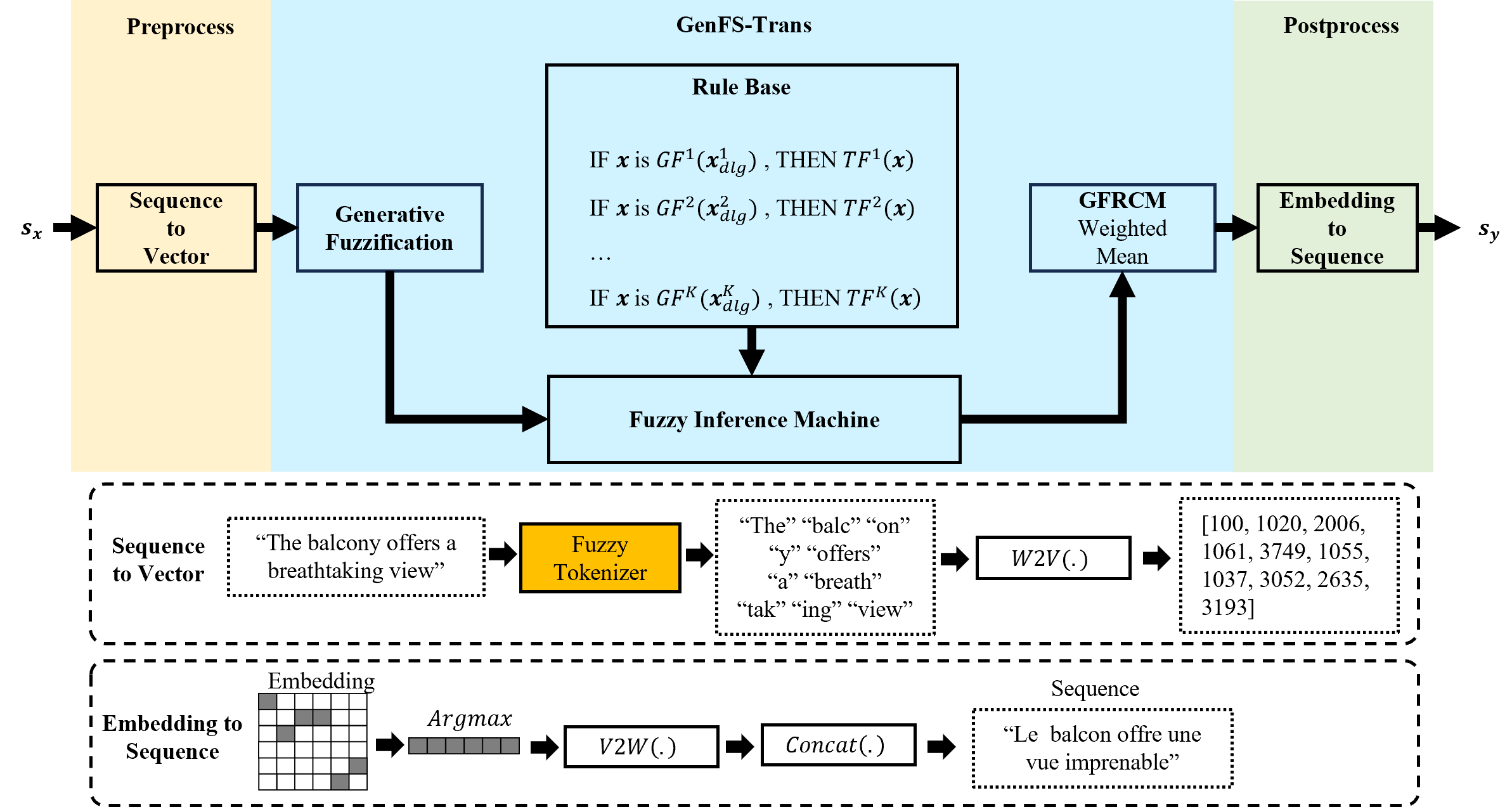

Generative Models (GMs), particularly Large Language Models (LLMs), have garnered significant attention in machine learning and artificial intelligence for their ability to generate new data: they learn the statistical properties of the training data and produce new samples that resemble it. This capability has applications across many domains. However, the complex structures and large numbers of parameters of GMs obscure their input-output processes, which makes their outputs difficult to understand and control. Moreover, their purely data-driven learning mechanism limits their ability to acquire broader knowledge, leaving substantial room to improve their robustness and generalization. In this work, we leverage fuzzy systems, a classical modeling approach, to combine data-driven and knowledge-driven mechanisms for generative tasks. We propose a novel Generative Fuzzy System framework, named GenFS, which integrates the deep learning capabilities of GMs with the term-based interpretability and dual-driven mechanisms of fuzzy systems. Specifically, we propose FuzzyS2S, an end-to-end GenFS-based model for sequence generation. Experiments were conducted on 12 datasets covering three categories of generative tasks: machine translation, code generation, and summary generation. The results show that FuzzyS2S outperforms the Transformer in both accuracy and fluency. Furthermore, it performs better than the state-of-the-art models T5 and CodeT5 in some application scenarios.
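The abstract refers to fuzzy systems and rule-based inference without showing how a fuzzy system maps an input to an output. As a generic illustration only (this is not the FuzzyS2S model, whose architecture is not described here), a classical first-order Takagi-Sugeno-Kang (TSK) fuzzy system can be sketched with NumPy. The Gaussian membership functions, product t-norm, number of rules, and all parameter values are illustrative assumptions, and the function names `gaussian_membership` and `tsk_inference` are hypothetical.

```python
import numpy as np


def gaussian_membership(x, centers, sigmas):
    """Membership degree of each input feature in each rule's fuzzy set.

    x: (d,) input vector; centers, sigmas: (K, d) arrays for K rules.
    Returns a (K, d) array of membership degrees in (0, 1].
    """
    return np.exp(-((x - centers) ** 2) / (2.0 * sigmas ** 2))


def tsk_inference(x, centers, sigmas, consequents):
    """First-order TSK fuzzy inference for a single input vector.

    consequents: (K, d + 1) coefficients [bias, w_1, ..., w_d] for each rule.
    Returns the defuzzified scalar output and the normalized firing strengths.
    Assumes at least one rule has a non-zero firing strength.
    """
    mu = gaussian_membership(x, centers, sigmas)   # (K, d) antecedent memberships
    firing = np.prod(mu, axis=1)                   # product t-norm -> (K,)
    weights = firing / np.sum(firing)              # normalize firing strengths
    x_aug = np.concatenate(([1.0], x))             # prepend 1 for the bias term
    rule_outputs = consequents @ x_aug             # (K,) linear output of each rule
    return float(weights @ rule_outputs), weights  # firing-strength-weighted average


# Two illustrative rules on a one-dimensional input:
#   Rule 1: IF x is "near -1" THEN y = 1
#   Rule 2: IF x is "near +1" THEN y = 3
centers = np.array([[-1.0], [1.0]])
sigmas = np.array([[1.0], [1.0]])
consequents = np.array([[1.0, 0.0], [3.0, 0.0]])

y, w = tsk_inference(np.array([0.0]), centers, sigmas, consequents)
print(y)  # → 2.0 (x = 0 fires both rules equally)
```

Each rule reads as an "IF antecedent THEN consequent" statement over linguistic terms such as "near -1", which is the kind of term-based readability the abstract attributes to fuzzy systems; how GenFS combines such rules with deep generative models is specified in the paper itself.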